Stereo Vision

1. Readings

1. ch2. Passive 3D imaging by Stephen Se and Nick Pears (Springer)

2. Multiview Geometry by Zisserman (Part 2)

3. OpenCV epipolar geometry

2. Introduction

Cameras are usually considered passive imaging systems (here, passive 3D imaging systems) because they do not project their own source of light or other electromagnetic radiation (EMR) onto the scene. A LIDAR system, by contrast, is an active 3D imaging system because it does project its own EMR. With increasing computer processing power and falling camera prices, many real-world applications of passive 3D imaging have emerged. Stereo refers to multiple images taken simultaneously using two or more cameras, which are collectively called a stereo camera. Human stereo vision fuses the images recorded by our two eyes and exploits the differences between them to give us a sense of depth.

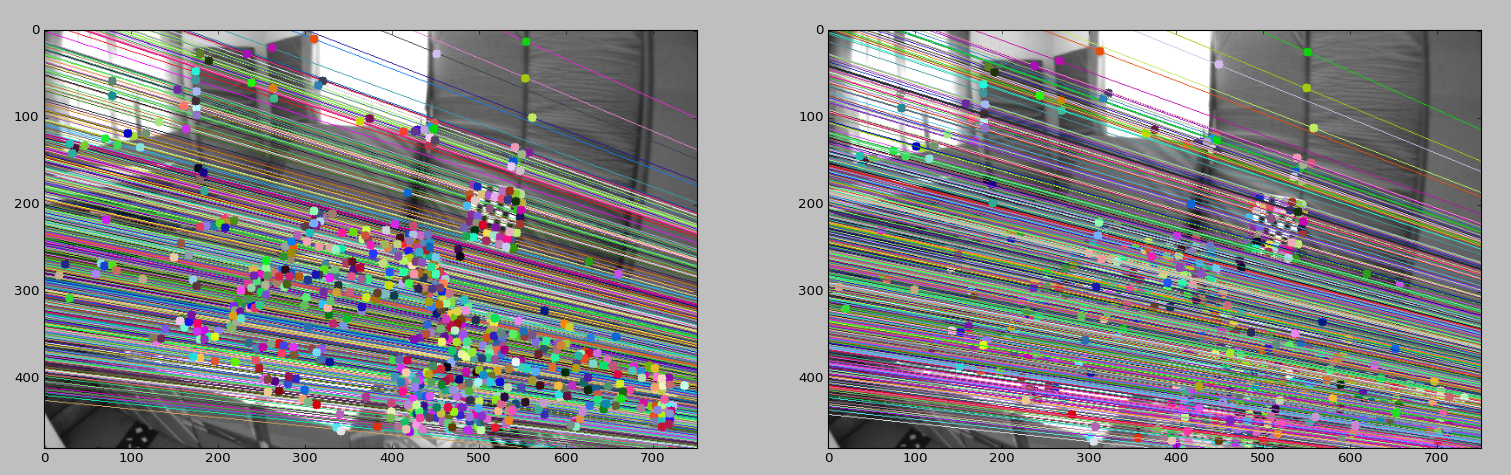

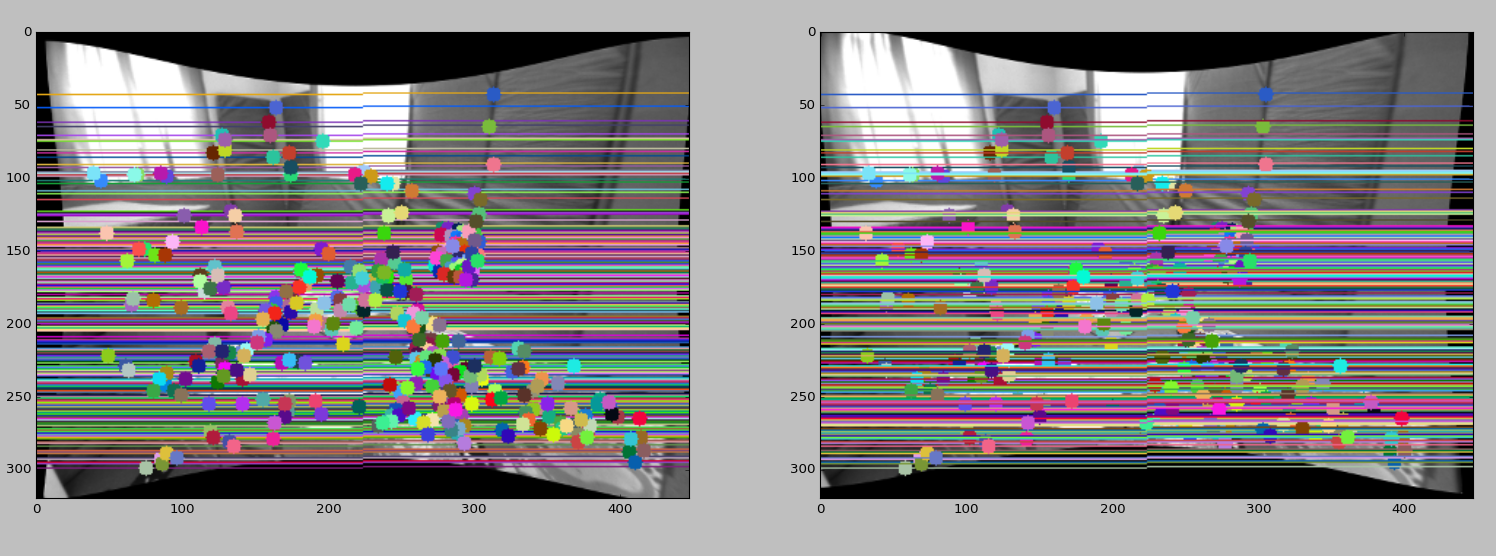

If we can find the same scene point in both camera images (the correspondence problem), we can back-project a ray from each camera through its image point and find where the two rays intersect (triangulation) to recover the 3D position. Converting 2D image points into 3D rays requires the camera parameters, which come from camera calibration. The difference between the positions of a corresponding point in the left and right images is called the disparity.
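To make the link between disparity and depth concrete, here is a minimal sketch for the special case of a rectified stereo pair (parallel optical axes, corresponding points on the same image row). In that case, depth follows from similar triangles as Z = f * B / d. The function name, the focal length in pixels, and the baseline in metres are illustrative assumptions, not values from the readings.

```python
def depth_from_disparity(x_left, x_right, focal_px, baseline_m):
    """Depth of a point seen in a rectified stereo pair.

    x_left, x_right: horizontal pixel coordinates of the same scene point
    focal_px:        focal length in pixels (assumed equal for both cameras)
    baseline_m:      distance between the two camera centres, in metres
    """
    disparity = x_left - x_right
    if disparity <= 0:
        # Zero disparity means a point at infinity; negative disparity
        # means the match is inconsistent with a rectified pair.
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity


# Hypothetical numbers: 800 px focal length, 10 cm baseline, 20 px disparity.
depth_m = depth_from_disparity(420.0, 400.0, focal_px=800.0, baseline_m=0.1)
```

Because depth is inversely proportional to disparity, nearby points produce large disparities and distant points produce small ones, so a fixed matching error of one pixel costs more depth accuracy the farther away the point is.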

In contrast to stereo vision, structure from motion (SfM) refers to a single moving camera, where an image sequence is captured over a period of time, while stereo refers to fixed relative viewpoints with synchronized image capture. In the SfM case, optical flow can be computed; it estimates the motion field from the image sequence based on the spatial and temporal variations of image brightness.

3. Cameras and Calibration

4. Epipolar Geometry

Epipolar geometry establishes the relationship between two camera views. With calibrated cameras and metric image coordinates, it depends only on the relative pose between the cameras. With uncalibrated cameras and pixel-based image coordinates, it additionally depends on the cameras' intrinsic parameters. In both cases it is independent of the scene.
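The dependence described above can be sketched numerically. The example below assumes the common convention that a point X1 in the first camera frame maps to X2 = R X1 + t in the second. Under it, the essential matrix E = [t]x R relates metric (normalized) image coordinates, and the fundamental matrix F = K2^-T E K1^-1 relates pixel coordinates. The pose, intrinsics, and scene points are made-up values for illustration only.

```python
import numpy as np

def skew(t):
    """Return the 3x3 matrix [t]x such that skew(t) @ v equals np.cross(t, v)."""
    tx, ty, tz = t
    return np.array([[0.0, -tz, ty],
                     [tz, 0.0, -tx],
                     [-ty, tx, 0.0]])

def essential_matrix(R, t):
    """Essential matrix: depends only on the relative pose (R, t)."""
    return skew(t) @ R

def fundamental_matrix(E, K1, K2):
    """Fundamental matrix: additionally depends on the intrinsics K1, K2."""
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)

# Illustrative relative pose: 5 degree rotation about y, 10 cm shift along x.
theta = np.deg2rad(5.0)
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([-0.1, 0.0, 0.0])

# Illustrative intrinsics, shared by both cameras.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])

E = essential_matrix(R, t)
F = fundamental_matrix(E, K, K)

def epipolar_residuals(X1):
    """Return (x2^T E x1, p2^T F p1) for a 3D point given in camera-1 coordinates."""
    X2 = R @ X1 + t
    x1 = X1 / X1[2]          # metric (normalized) image coordinates
    x2 = X2 / X2[2]
    p1 = K @ x1              # homogeneous pixel coordinates
    p2 = K @ x2
    return x2 @ E @ x1, p2 @ F @ p1

# Two unrelated scene points satisfy the same E and F (up to rounding error).
res_near = epipolar_residuals(np.array([0.3, -0.2, 2.0]))
res_far = epipolar_residuals(np.array([-1.0, 0.5, 10.0]))
```

Both residual pairs come out as zero up to floating-point error, although the two points lie at very different depths. This is the sense in which the epipolar constraint is independent of the scene.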

3D reconstruction from an image pair must solve two problems:

a. Correspondence problem

b. Reconstruction problem

Of these two, correspondence is the more difficult because it is a search problem, whereas for a stereo camera of known calibration, reconstruction of the 3D measurements is a simple geometric procedure (triangulation).
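As a sketch of why reconstruction is the easier half, the following shows one common way to triangulate a point once a correspondence is known: the linear (DLT) method, which stacks two equations per view and takes the null vector of the resulting matrix. It is only one of several triangulation methods. The projection matrices, the helper `project`, and the test point are illustrative assumptions.

```python
import numpy as np

def triangulate_dlt(P1, P2, p1, p2):
    """Linear (DLT) triangulation of one point from two views.

    P1, P2: 3x4 camera projection matrices (known from calibration)
    p1, p2: (u, v) pixel coordinates of the same scene point in each view
    Returns the 3D point as a length-3 array.
    """
    u1, v1 = p1
    u2, v2 = p2
    # Each view contributes two linear equations in the homogeneous point X.
    A = np.stack([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point X with projection matrix P to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical calibrated stereo camera: shared intrinsics, 10 cm baseline along x.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

# Synthetic check: project a known point, then recover it from the two pixels.
X_true = np.array([0.3, -0.2, 2.0])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free correspondences the two rays intersect exactly and `X_est` matches `X_true`. With real, noisy matches the rays generally do not meet, and the SVD returns a least-squares solution in the algebraic sense instead.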